Preface

A computer science degree traditionally includes courses in operating systems, compilers, and databases that replace mystery with code. These courses transform Linux, Postgres, and LLVM into improvements, additions, and optimizations of an understandable core architecture. The lesson transcends the specific system studied: all computer systems, no matter how big and seemingly complex, can be studied and understood.

But web browsers are still opaque, not just to students but to industry programmers and even to researchers. This book dissipates that mystery by systematically explaining all major components of a modern web browser.

Reading This Book

Parts 1–3 of this book construct a basic browser weighing in at around 1000 lines of code, twice that after exercises. The average chapter takes 4–6 hours to read, implement, and debug for someone with a few years’ programming experience. Part 4 of this book covers advanced topics; those chapters are longer and have more code. The final browser weighs in at about 3000 lines.

Your browserThis book assumes that you will be building a web browser along the way while reading it. However, it does present nearly all the code—inlined into the book—for a working browser for every chapter. So most of the time, the book uses the term “our browser”, which refers to the conceptual browser we (you and us, the authors) have built so far. In cases where the book is referring specifically to the implementation you have built, the book says “your browser”. will “work” at each step of the way, and every chapter will build upon the last.This idea is from J. R. Wilcox, inspired in turn by S. Zdancewic’s course on compilers. That way, you will also practice growing and improving complex software. If you feel particularly interested in some component, please do flesh it out, complete the exercises, and add missing features. We’ve tried to arrange it so that this doesn’t make later chapters more difficult.

The code in this book uses Python 3, and

we recommend you follow along in the same. When the book shows Python

command lines, it calls the Python binary python3.This is for clarity. On some

operating systems, python means Python 3, but on others

that means Python 2. Check which version you have! That

said, the text avoids dependencies where possible and you can try to

follow along in another language. Make sure your language has libraries

for TLS connections (Python has one built in), graphics (the text uses

Tk, Skia, and SDL), and JavaScript evaluation (the text uses DukPy).

This book’s browser is irreverent toward standards: it handles only a sliver of the full HTML, CSS, and JavaScript languages, mishandles errors, and isn’t resilient to malicious inputs. It is also quite slow. Despite that, its architecture matches that of real browsers, providing insight into those 10 million line of code behemoths.

That said, we’ve tried to explicitly note when the book’s browser simplifies or diverges from standards. If you’re not sure how your browser should behave in some edge case, fire up your favorite web browser and try it out.

Acknowledgments

We’d like to recognize the countless people who built the web and the various web browsers. They are wonders of the modern world. Thank you! We learned a lot from the books and articles listed in this book’s bibliography—thank you to their authors. And we’re especially grateful to the many contributors to articles on Wikipedia (especially those on historic software, formats, and protocols). We are grateful for this amazing resource, one which in turn was made possible by the very thing this book is about.

Pavel: James R. Wilcox and I dreamed up this book during a late-night chat at ICFP 2018. Max Willsey proofread and helped sequence the chapters. Zach Tatlock encouraged me to develop the book into a course. And the students of CS 6968, CS 4962, and CS 4560 at the University of Utah found countless errors and suggested important simplifications. I am thankful to all of them. Most of all, I am thankful to my wife Sara, who supported my writing and gave me the strength to finish this many-year-long project.

Chris: I am eternally grateful to my wife Sara for patiently listening to my endless musings about the web, and encouraging me to turn my idea for a browser book into reality. I am also grateful to Dan Gildea for providing feedback on my browser-book concept on multiple occasions. Finally, I’m grateful to Pavel for doing the hard work of getting this project off the ground and allowing me to join the adventure. (Turns out Pavel and I had the same idea!)

A final note

This book is, and will remain, a work in progress. Please leave

comments and mark typos; the book has built-in feedback tools, which you

can enable with Ctrl-E (or Cmd-E on a Mac).

The full source code is also available on GitHub, though

we prefer to receive comments through the built-in tools.

Browsers and the Web

I—this is Chris speaking—have known the webBroadly defined, the web is the interlinked network (“web”) of web pages on the internet. If you’ve never made a web page, I recommend MDN’s Learn Web Development series, especially the Getting Started guide. This book will be easier to read if you’re familiar with the core technologies. for all of my adult life. The web for me is something of a technological companion, and I’ve never been far from it in my studies or my work. Perhaps it’s been the same for you. And using the web means using a browser. I hope, as you read this book, that you fall in love with web browsers, just like I did.

The Browser and Me

Since I first encountered the web and its predecessors,For me, bulletin board systems (BBSs) over a dial-up modem connection. A BBS, like a browser, is a window into dynamic content somewhere else on the internet. in the early 1990s, I’ve been fascinated by browsers and the concept of networked user interfaces. When I surfed the web, even in its earliest form, I felt I was seeing the future of computing. In some ways, the web and I grew together—for example, 1994, the year the web went commercial, was the same year I started college; while there I spent a fair amount of time surfing the web, and by the time I graduated in 1999, the browser had fueled the famous dot-com speculation gold rush. Not only that, but the company for which I now work, Google, is a child of the web and was founded during that time.

In my freshman year at college, I attended a presentation by a RedHat salesman. The presentation was of course aimed at selling RedHat Linux, probably calling it the “operating system of the future” and speculating about the “year of the Linux desktop”. But when asked about challenges RedHat faced, the salesman mentioned not Linux but the web: he said that someone “needs to make a good browser for Linux”.Netscape Navigator was available for Linux at that time, but it wasn’t viewed as especially fast or featureful compared to its implementation on other operating systems. Even back then, in the first years of the web, the browser was already a necessary component of every computer. He even threw out a challenge: “How hard could it be to build a better browser?” Indeed, how hard could it be? What makes it so hard? That question stuck with me for a long time.Meanwhile, the “better Linux browser than Netscape” took a long time to appear…

How hard indeed! After eleven years in the trenches working on Chrome, I now know the answer to his question: building a browser is both easy and incredibly hard, both intentional and accidental. And everywhere you look, you see the evolution and history of the web wrapped up in one codebase. It’s fun and endlessly interesting.

So that’s how I fell in love with web browsers. Now let me tell you why you will, too.

The Web in History

The web is a grand, crazy experiment. It’s natural, nowadays, to watch videos, read news, and connect with friends on the web. That can make the web seem simple and obvious, finished, already built. But the web is neither simple nor obvious (and is certainly not finished). It is the result of experiments and research, reaching back to nearly the beginning of computing,And the web also needed rich computer displays, powerful user-interface-building libraries, fast networks, and sufficient computing power and information storage capacity. As so often happens with technology, the web had many similar predecessors, but only took its modern form once all the pieces came together. about how to help people connect and learn from each other.

In the early days, the internet was a world-wide network of computers, largely at universities, labs, and major corporations, linked by physical cables and communicating over application-specific protocols. The (very) early web mostly built on this foundation. Web pages were files in a specific format stored on specific computers. The addresses for web pages named the computer and the file, and early servers did little besides read files from a disk. The logical structure of the web mirrored its physical structure.

A lot has changed. The HyperText Markup Language (HTML) for web pages is now usually dynamically assembled on the fly“Server-side rendering” is the process of assembling HTML on the server when loading a web page. Server-side rendering can use web technologies like JavaScript and even headless browsers. Yet one more place browsers are taking over! and sent on demand to your browser. The pieces being assembled are themselves filled with dynamic content—news, inbox contents, and advertisements adjusted to your particular tastes. Even the addresses no longer identify a specific computer—content distribution networks route requests to any of thousands of computers all around the world. At a higher level, most web pages are served not from someone’s home computerPeople actually did this! And when their website became popular, it often ran out of bandwidth or computing power and became inaccessible. but from a major corporation’s social media platform or cloud computing service.

With all that’s changed, some things have stayed the same, the core building blocks that are the essence of the web:

- The web is a network of information linked by hyperlinks.

- The user uses a user agent, called a browser, to navigate the web.

- Information is requested with the HyperText Transfer Protocol (HTTP) and structured with the HTML document format.

- Documents are identified by Uniform Resource Locators (URLs), not by their content, and may be dynamically generated.

- Web pages can link to auxiliary assets in different formats, including images, videos, Cascading Style Sheets (CSS), and JavaScript.

- All these building blocks are open, standardized, and free to use or reuse.

As a philosophical matter, perhaps one or another of these principles is secondary. One could try to distinguish between the networking and rendering aspects of the web. One could abstract linking and networking from the particular choice of protocol and data format. One could ask whether the browser is necessary in theory, or argue that HTTP, URLs, and hyperlinking are the only truly essential parts of the web.

Perhaps.It is indeed true that one or more of the implementation choices could be replaced, and perhaps that will happen over time. For example, JavaScript might eventually be replaced by another language or technology, HTTP by some other protocol, or HTML by a successor. Yet the web will stay the web, because any successor format is sure to support a superset of functionality, and have the same fundamental structure. The web is, after all, an experiment; the core technologies evolve and grow. But the web is not an accident; its original design reflects truths not just about computing, but about how human beings can connect and interact. The web not only survived but thrived during the virtualization of hosting and content, specifically due to the elegance and effectiveness of this original design.

The key thing to understand is that this grand experiment is not over. The essence of the web will stay, but by building web browsers you have the chance to shape its future.

Real Browser Codebases

So let me tell you what it’s like to contribute to a browser. Some

time during my first few months of working on Chrome, I came across the

code implementing the<br>

tag—look at that, the good old <br> tag, which I’ve

used many times to insert newlines into web pages! And the

implementation turns out to be barely any code at all, both in Chrome

and in this book’s simple browser.

But Chrome as a whole—its features, speed, security, reliability—wow. Thousands of person-years went into it. There is constant pressure to do more—to add more features, to improve performance, to keep up with the “web ecosystem”—for the thousands of businesses, millions of developers,I usually prefer “engineer”—hence the title of this book—but “developer” or “web developer” is much more common on the web. One important reason is that anyone can build a web page—not just trained software engineers and computer scientists. “Web developer” also is more inclusive of additional, critical roles like designers, authors, editors, and photographers. A web developer is anyone who makes web pages, regardless of how. and billions of users on the web.

Working on such a codebase can feel daunting. I often find lines of code last touched 15 years ago by someone I’ve never met; or even now discover files and classes that I never knew existed; or see lines of code that don’t look necessary, yet turn out to be important. What does that 15-year-old code do? What is the purpose of these new-to-me files? Is that code there for a reason?

Every browser has thousands of unfixed bugs, from the smallest of mistakes to myriad mix ups and mismatches. Every browser must be endlessly tuned and optimized to squeeze out that last bit of performance. Every browser requires painstaking work to continuously refactor the code to reduce its complexity, often through the carefulBrowsers are so performance-sensitive that, in many places, merely the introduction of an abstraction—a function call or branching overhead—can have an unacceptable performance cost! introduction of modularization and abstraction.

What makes a browser different from most massive code bases is their urgency. Browsers are nearly as old as any “legacy” codebase, but are not legacy, not abandoned or half-deprecated, not slated for replacement. On the contrary, they are vital to the world’s economy. Browser engineers must therefore fix and improve rather than abandon and replace. And since the character of the web itself is highly decentralized, the use cases met by browsers are to a significant extent not determined by the companies “owning” or “controlling” a particular browser. Other people—including you—can and do contribute ideas, proposals, and implementations.

What’s amazing is that, despite the scale and the pace and the complexity, there is still plenty of room to contribute. Every browser today is open source, which opens up its implementation to the whole community of web developers. Browsers evolve like giant research projects, where new ideas are constantly being proposed and tested out. As you would expect, some features fail and some succeed. The ones that succeed end up in specifications and are implemented by other browsers. Every web browser is open to contributions—whether fixing bugs or proposing new features or implementing promising optimizations.

And it’s worth contributing, because working on web browsers is a lot of fun.

Browser Code Concepts

HTML and CSS are meant to be black boxes—declarative application programming interfaces (APIs)—where one specifies what outcome to achieve, and the browser itself is responsible for figuring out how to achieve it. Web developers don’t, and mostly can’t, draw their web pages’ pixels on their own.

That can make the browser magical or frustrating—depending on whether it is doing the right thing! But that also makes a browser a pretty unusual piece of software, with unique challenges, interesting algorithms, and clever optimizations. Browsers are worth studying for the pure pleasure of it.

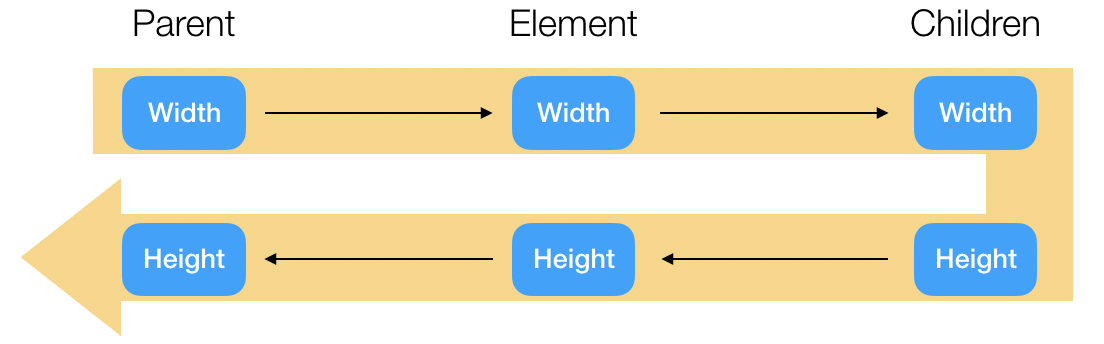

What makes that all work is the web browser’s implementations of inversion of control, constraint programming, and declarative programming. The web inverts control, with an intermediary—the browser—handling most of the rendering, and the web developer specifying rendering parameters and content to this intermediary. For example, in HTML there are many built-in form control elements that take care of the various ways the user of a web page can provide input. The developer need only specify parameters such as button names, sizing, and look-and-feel, or JavaScript extension points to handle form submission to the server. The rest of the implementation is taken care of by the browser. Further, these parameters usually take the form of constraints between the relative sizes and positions of on-screen elements instead of specifying their values directly;Constraint programming is clearest during web page layout, where font and window sizes, desired positions and sizes, and the relative arrangement of widgets is rarely specified directly. the browser solves the constraints to find those values. The same idea applies for actions: web pages mostly require that actions take place without specifying when they do. This declarative style means that from the point of view of a developer, changes “apply immediately”, but under the hood, the browser can be lazy and delay applying the changes until they become externally visible, either due to subsequent API calls or because the page has to be displayed to the user.For example, when exactly does the browser compute HTML element styles? Any change to the styles is visible to all subsequent API calls, so in that sense it applies “immediately”. But it is better for the browser to delay style recalculation, avoiding redundant work if styles change twice in quick succession. Maximally exploiting the opportunities afforded by declarative programming makes real-world browsers very complex.

There are practical reasons for the unusual design of a browser. Yes, developers lose some control and agency—when pixels are wrong, developers cannot fix them directly.Loss of control is not necessarily specific to the web—much of computing these days relies on mountains of other people’s code. But they gain the ability to deploy content on the web without worrying about the details, to make that content instantly available on almost every computing device in existence, and to keep it accessible in the future, mostly avoiding software’s inevitable obsolescence.

To me, browsers are where algorithms come to life. A browser contains a rendering engine more complex and powerful than any computer game; a full networking stack; clever data structures and parallel programming techniques; a virtual machine, an interpreted language, and a just-in-time compiler; a world-class security sandbox; and a uniquely dynamic system for storing data.

And the truth is—you use a browser all the time, maybe for reading this book! That makes the algorithms more approachable in a browser than almost anywhere else, because the web is already familiar.

The Role of the Browser

The web is at the center of modern computing. Every year the web expands its reach to more and more of what we do with computers. It now goes far beyond its original use for document-based information sharing: many people now spend their entire day in a browser, not using a single other application! Moreover, desktop applications are now often built and delivered as web apps: web pages loaded by a browser but used like installed applications.Related to the notion of a web app is a Progressive Web App, which is a web app that becomes indistinguishable from a native app through progressive enhancement. Even on mobile devices, apps often embed a browser to render parts of the application user interface (UI).The fraction of such “hybrid” apps that are shown via a “web view” is likely increasing over time. In some markets like China, “super-apps” act like a mobile web browser for web-view-based games and widgets. Perhaps in the future both desktop and mobile devices will largely be containers for web apps. Already, browsers are a critical and indispensable part of computing.

So given this centrality, it’s worth knowing how the web works. And in particular, it’s worth focusing on the browser, which is the user agentThe user agent concept views a computer, or software within the computer, as a trusted assistant and advocate of the human user. and the mediator of the web’s interactions, which ultimately is what makes the web’s principles real. The browser is also the implementer of the web: its sandbox keeps web browsing safe; its algorithms implement the declarative document model; its UI navigates links. Web pages load fast and react smoothly only when the browser is hyper-efficient.

Browsers and You

This book explains how to build a simple browser, one that can—despite its simplicity—display interesting-looking web pages and support many interesting behaviors. As you’ll see, it’s surprisingly easy, and it demonstrates all the core concepts you need to understand a real-world browser. The browser stops being a mystery when it becomes code.

The intention is for you to build your own browser as you work through the early chapters. Once it is up and running, there are endless opportunities to improve performance or add features, some of which are suggested as exercises. Many of these exercises are features implemented in real browsers, and I encourage you to try them—adding features is one of the best parts of browser development!

The book then moves on to details and advanced features that flesh out the architecture of a real browser’s rendering engine, based on my experiences with Chrome. After finishing the book, you should be able to dig into the source code of Chromium, Gecko, or WebKit and understand it without too much trouble.

I hope the book lets you appreciate a browser’s depth, complexity, and power. I hope the book passes along a browser’s beauty—its clever algorithms and data structures, its co-evolution with the culture and history of computing, its centrality in our world. But most of all, I hope the book lets you see in yourself someone building the browser of the future.

History of the Web

This chapter dives into the history of the web itself: where it came from, and how the web and browsers have evolved to date. This history is not exhaustive;For example, there is nothing much about Standard Generalized Markup Language ( SGML) or other predecessors to HTML. (Except in this footnote!) the focus is the key events and ideas that led to the web, and the goals and motivations of its inventors.

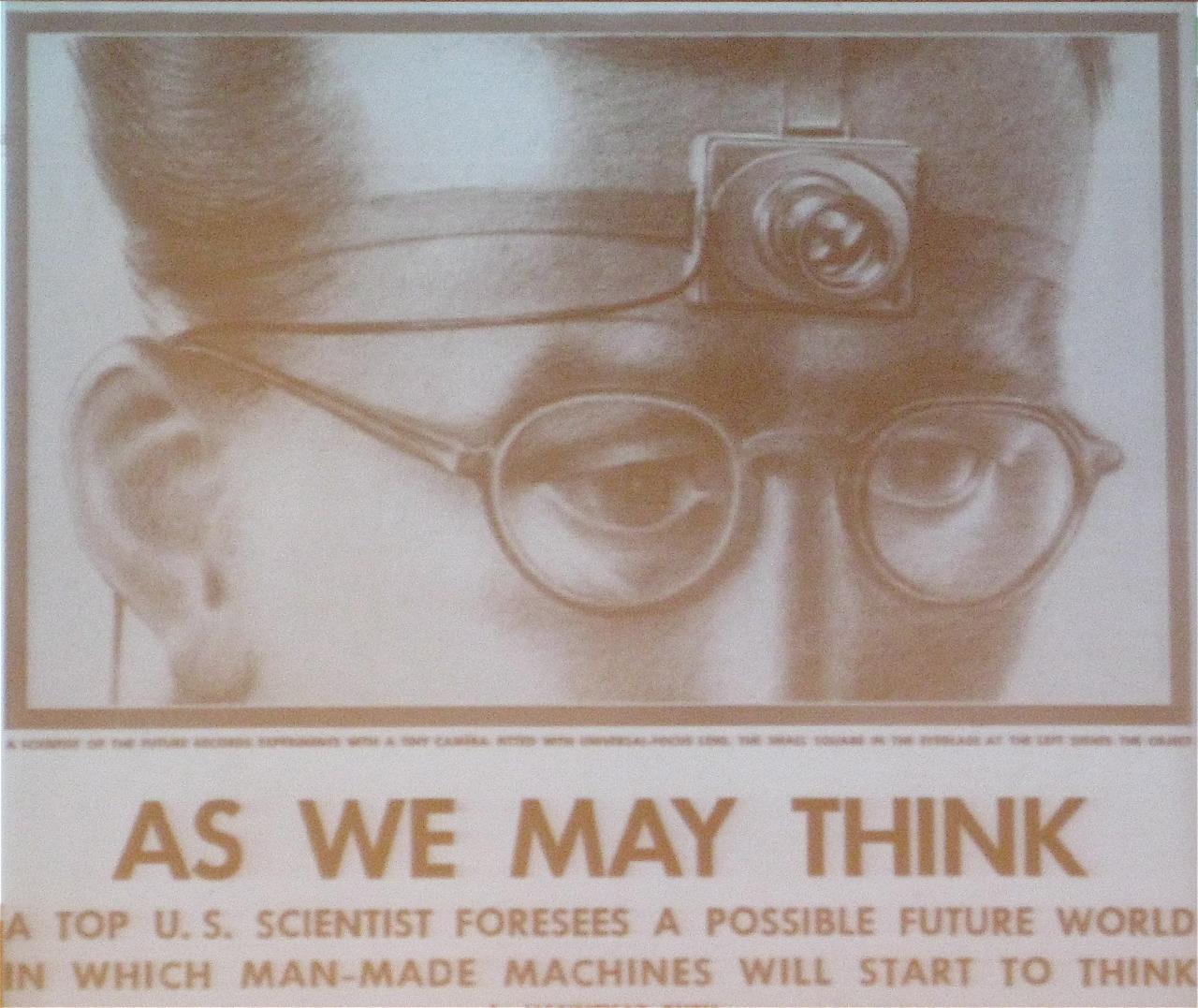

The Memex Concept

An influential early exploration of how computers might revolutionize information is a 1945 essay by Vannevar Bush entitled “As We May Think”. This essay envisioned a machine called a memex that helps an individual human see and explore all the information in the world (see Figure 1). It was described in terms of the microfilm screen technology of the time, but its purpose and concept has some clear similarities to the web as we know it today, even if the user interface and technology details differ.

The web is, at its core, organized around the Memex-like goal of representing and displaying information, providing a way for humans to effectively learn and explore. The collective knowledge and wisdom of the species long ago exceeded the capacity of a single mind, organization, library, country, culture, group or language. However, while we as humans cannot possibly know even a tiny fraction of what it is possible to know, we can use technology to learn more efficiently than before, and, in particular, to quickly access information we need to learn, remember, or recall. Consider this imagined research session described by Vannevar Bush—one that is remarkably similar to how we sometimes use the web:

The owner of the memex, let us say, is interested in the origin and properties of the bow and arrow. […] He has dozens of possibly pertinent books and articles in his memex. First he runs through an encyclopedia, finds an interesting but sketchy article, leaves it projected. Next, in a history, he finds another pertinent item, and ties the two together. Thus he goes, building a trail of many items.

Computers, and the internet, allow us to process and store the information we want. But it is the web that helps us organize and find that information, that knowledge, making it useful.Google’s well-known mission statement to “organize the world’s information and make it universally accessible and useful” is almost exactly the same. This is not a coincidence—the search engine concept is inherently connected to the web, and was inspired by the design of the web and its antecedents.

“As We May Think” highlighted two features of the memex: information record lookup, and associations between related records. In fact, the essay emphasizes the importance of the latter—we learn by making previously unknown connections between known things:

When data of any sort are placed in storage, they are filed alphabetically or numerically. […] The human mind does not work that way. It operates by association.

By “association”, Bush meant a trail of thought leading from one record to the next via a human-curated link. He imagined not just a universal library, but a universal way to record the results of what we learn.

The Web Emerges

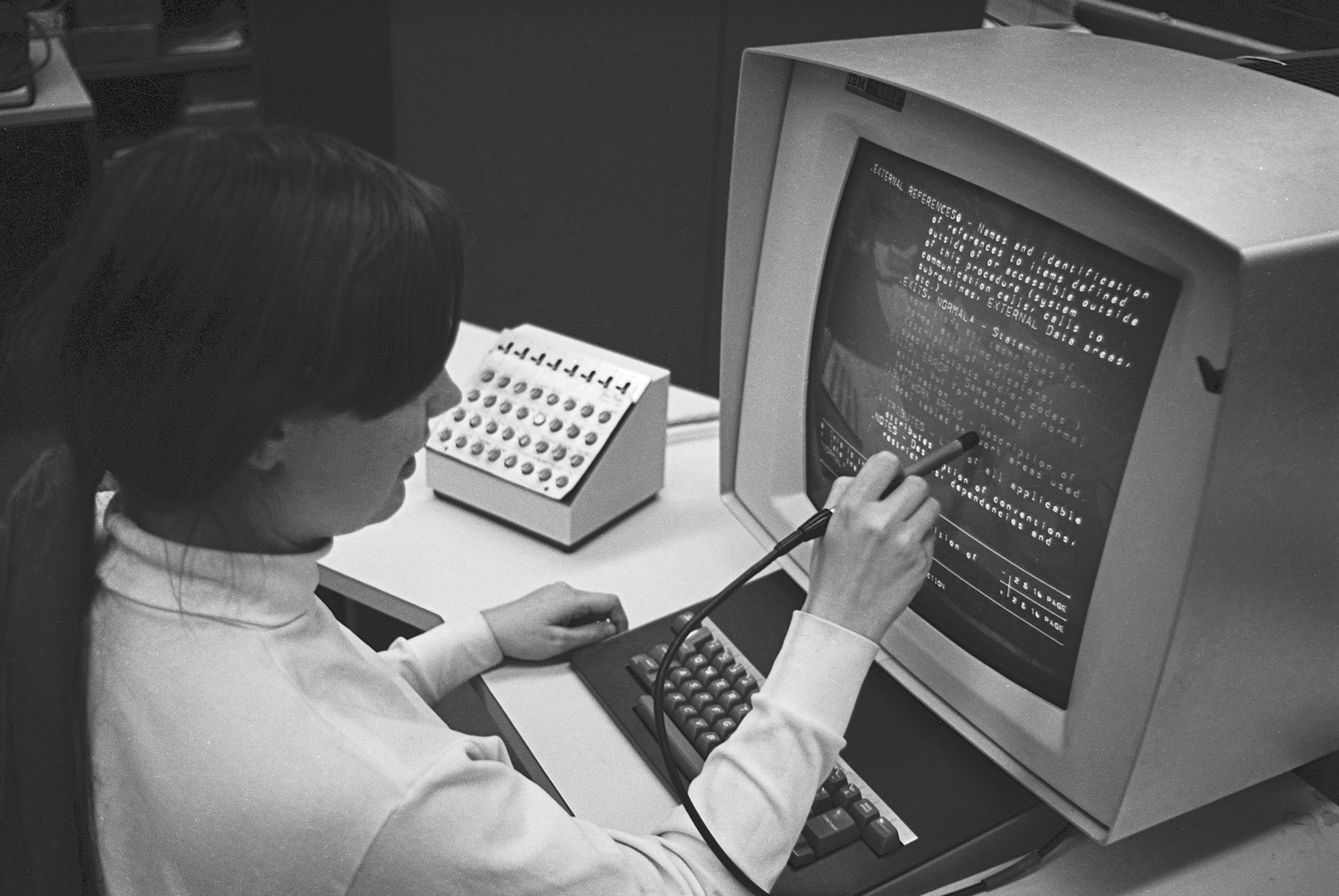

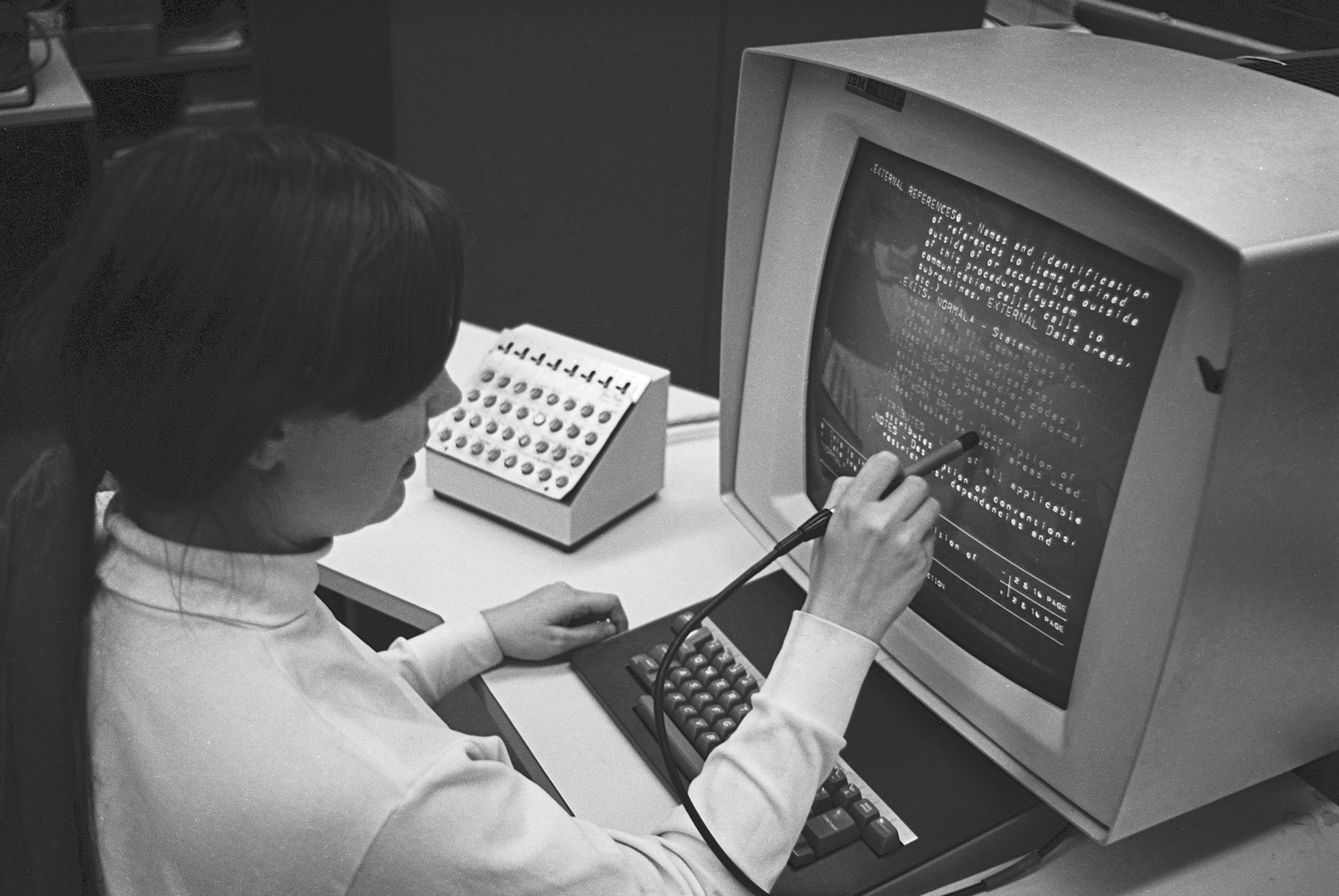

The concept of hypertext documents linked by hyperlinks was invented in 1964–65 by Project Xanadu, led by Ted Nelson.He was inspired by the long tradition of citation and criticism in academic and literary communities. The Project Xanadu research papers were heavily motivated by this use case. Hypertext is text that is marked up with hyperlinks to other text.A successor called the Hypertext Editing System was the first to introduce the back button, which all browsers now have. Since the system only had text, the “button” was itself text. Sound familiar? A web page is hypertext, and links between web pages are hyperlinks. The format for writing web pages is HTML and the protocol for loading web pages is HTTP, both of which abbreviations contain “HyperText”. See Figure 2 for an example of the early Hypertext Editing System.

Independently of Project Xanadu, the first hyperlink system appeared

for scrolling within a single document; it was later generalized to

linking between multiple documents. And just like those original

systems, the web has linking within documents as well as between them.

For example, the URL

http://browser.engineering/history.html#the-web-emerges

refers to a document called “history.html”, and

specifically to the element in it with the name

“the-web-emerges”: this section. Visiting that URL will

load this chapter and scroll to this section.

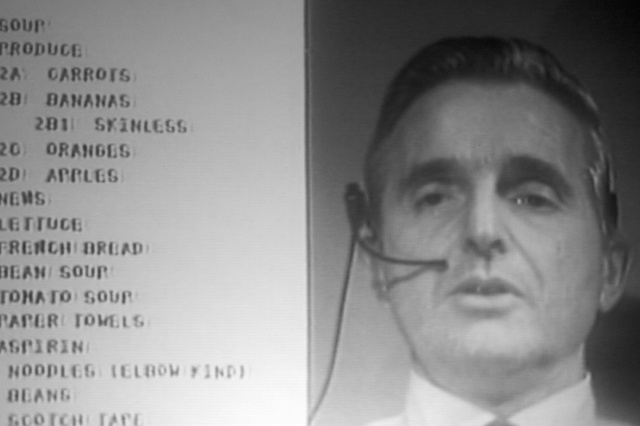

This work also formed and inspired one of the key parts of Douglas Engelbart’s mother of all demos, perhaps the most influential technology demonstration in the history of computing (see Figure 3). That demo not only showcased the key concepts of the web, but also introduced the computer mouse and graphical user interface, both of which are central components of a browser UI.That demo went beyond even this. There are some parts of it that have not yet been realized in any computer system. Watch it!

There is of course a very direct connection between this research and the document–URL–hyperlink setup of the web, which built on the hypertext idea and applied it in practice. The HyperTIES system, for example, had highlighted hyperlinks and was used to develop the world’s first electronically published academic journal, the 1988 issue of the Communications of the ACM. Tim Berners-Lee cites that 1988 issue as inspiration for the World Wide Web,Nowadays the World Wide Web is called just “the web”, or “the web ecosystem”—ecosystem being another way to capture the same concept as “World Wide”. The original wording lives on in the “www” in many website domain names. in which he joined the link concept with the availability of the internet, thus realizing many of the original goals of all this work from previous decades.Just as the web itself is a realization of previous ambitions and dreams, today we strive to realize the vision laid out by the web. (No, it’s not done yet!)

The word “hyperlink” may have been coined in 1987, in connection with the HyperCard system on Apple computers. This system was also one of the first, or perhaps the first, to introduce the concept of augmenting hypertext with scripts that handle user events like clicks and perform actions that enhance the UI—just like JavaScript on a web page! It also had graphical UI elements, not just text, unlike most predecessors.

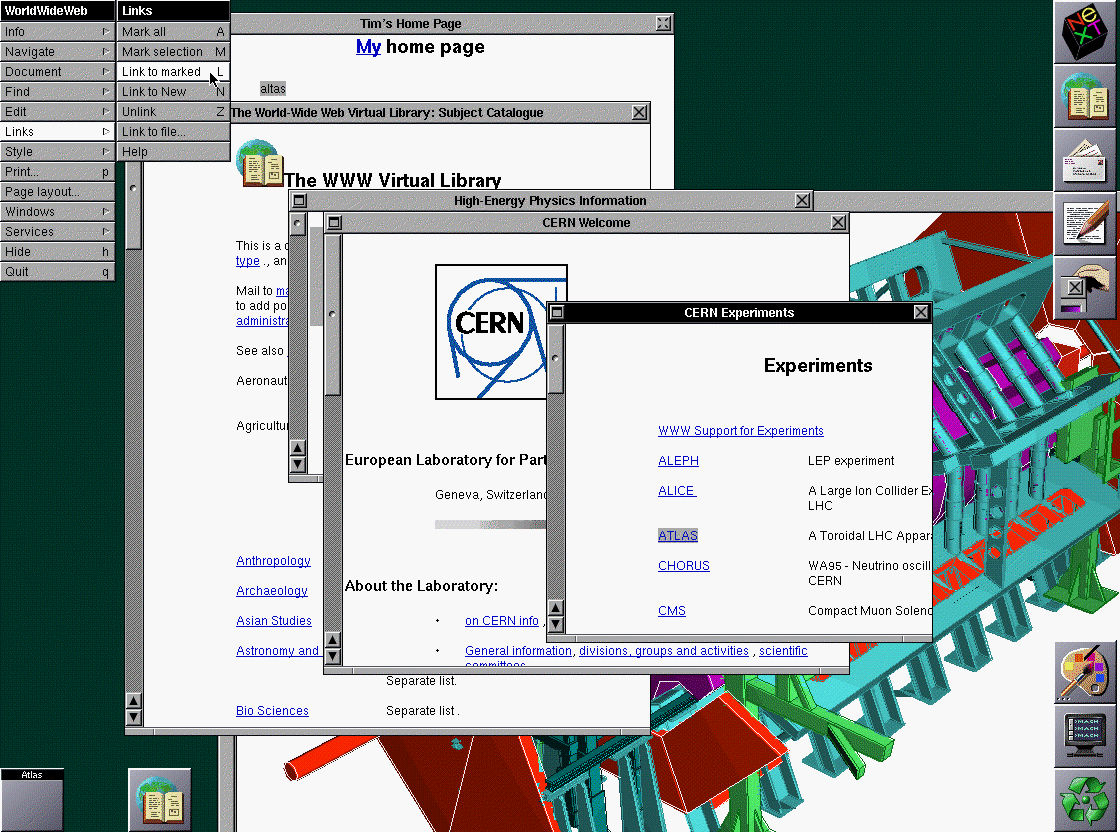

In 1989–1990, the first web browser (named WorldWideWeb, see Figure

4) and web server (named httpd, for HTTP Daemon, according

to UNIX naming conventions) were born, written by Tim Berners-Lee.

Interestingly, while that browser’s capabilities were in some ways

inferior to the browser you will implement in this book,No CSS! No JS! Not even

images! in other ways they go beyond the capabilities

available even in modern browsers.For example, the first browser included the concept of an

index page meant for searching within a site (vestiges of which exist

today in the “index.html” convention when a URL path ends in /”), and

had a WYSIWYG web page editor (the “contenteditable” HTML attribute on

DOM elements (see Chapter 16) have

similar semantic behavior, but built-in file saving is gone). Today, the

index is replaced with a search engine, and web page editors as a

concept are somewhat obsolete due to the highly dynamic nature of

today’s web page rendering. On December 20, 1990 the first web

page was created. The browser we will implement in this book is

easily able to render this web page, even today.Also, as you can see clearly,

that web page has not been updated in the meantime, and retains its

original aesthetics! In 1991, Berners-Lee advertised his

browser and the concept on the alt.hypertext

Usenet group.

Berners-Lee’s Brief History of the Web highlights a number of other key factors that led to the World Wide Web becoming the web we know today. One key factor was its decentralized nature, which he describes as arising from the academic culture of CERN, where he worked. The decentralized nature of the web is a key feature that distinguishes it from many systems that came before or after, and his explanation of it is worth quoting here (the italics are mine):

There was clearly a need for something like EnquireEnquire was a predecessor web-like database system, also written by Berners-Lee. but accessible to everyone. I wanted it to scale so that if two people started to use it independently, and later started to work together, they could start linking together their information without making any other changes. This was the concept of the web.

This quote captures one of the key value propositions of the web: its decentralized nature. The web was successful for several reasons, but they all had to do with decentralization:

Because there was no gatekeeper to doing anything, it was easy for anyone, even novices, to make simple web pages and publish them.

Because pages were identified simply by URLs, traffic could come to the web from outside sources like email, social networking, and search engines. Further, compatibility between sites and the power of hyperlinks created network effects that further strengthened the effect of hyperlinks from within the web.

Because the web was outside the control of any one entity—and kept that way via standards organizations—it avoided the problems of monopoly control and manipulation.

Browsers

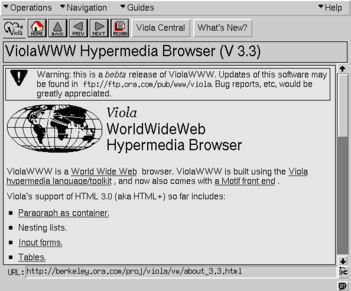

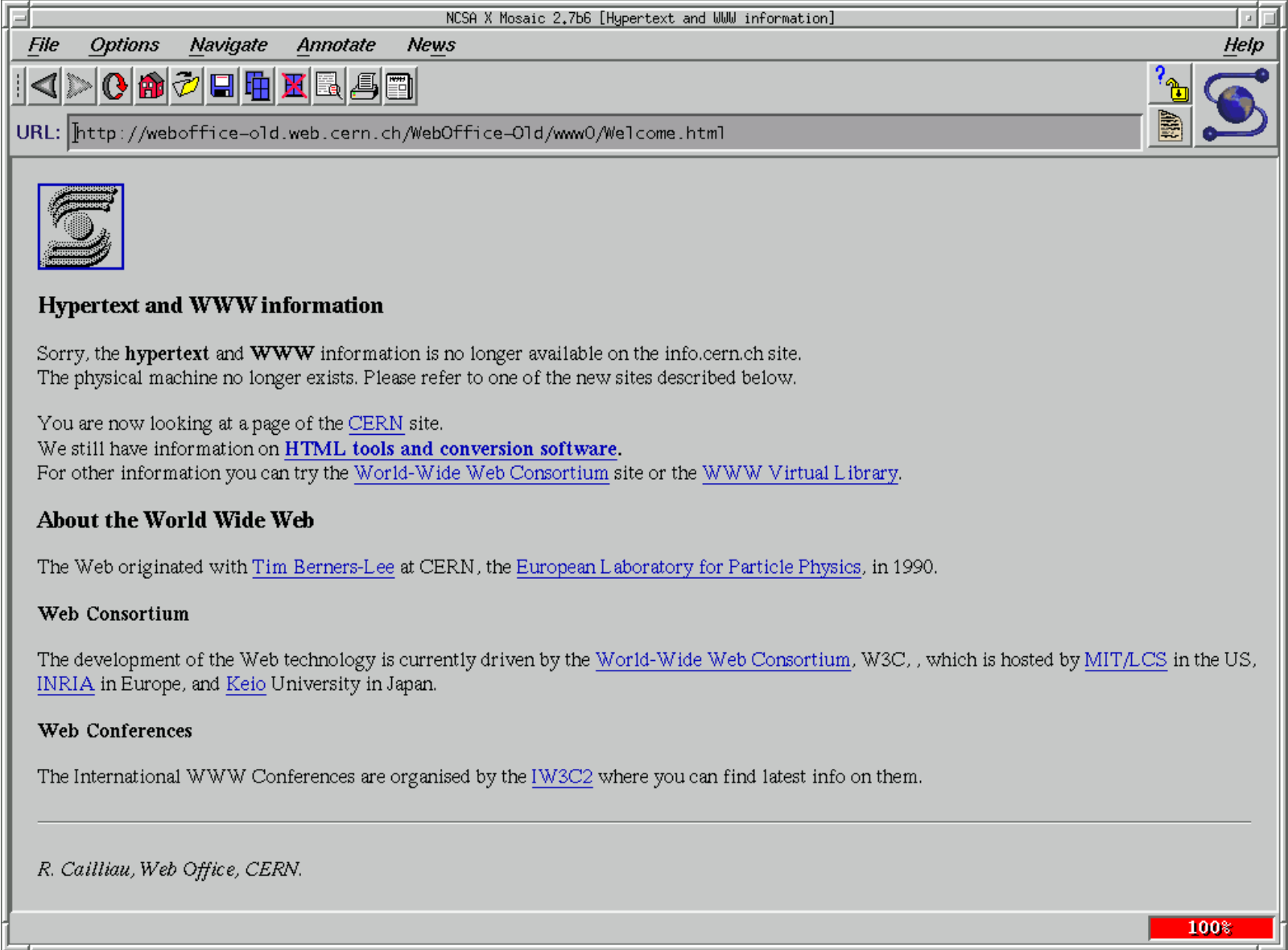

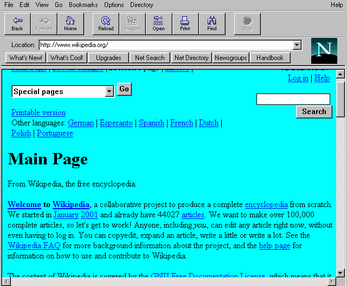

The first widely distributed browser may have been ViolaWWW (see Figure 5); this browser also pioneered multiple interesting features such as applets and images. It was in turn the inspiration for NCSA Mosaic (see Figure 6), which launched in 1993. One of the two original authors of Mosaic went on to co-found Netscape, which built Netscape Navigator (see Figure 7), the first commercial browser,By commercial I mean built by a for-profit entity. Netscape’s early versions were also not free software—you had to buy them from a store. They cost about $50. which launched in 1994. Feeling threatened, Microsoft launched Internet Explorer (see Figure 8) in 1995 and soon bundled it with Windows 95.

The era of the “first browser war” ensued: a competition between Netscape Navigator and Internet Explorer. There were also other browsers with smaller market shares; one notable example is Opera. The WebKit project began in 1999; Safari and Chromium-based browsers, such as Chrome and newer versions of Edge, descend from this codebase. Likewise, the Gecko rendering engine was originally developed by Netscape starting in 1997; the Firefox browser is descended from that codebase. During the first browser war, nearly all of the core features of this book’s simple browser were added, including CSS, DOM, and JavaScript.

The “second browser war”, which according to Wikipedia was 2004–2017,

was fought between a variety of browsers, in particular Internet

Explorer, Firefox, Safari, and Chrome. Initially, Safari and Chrome used

the same rendering engine, but Chrome forked into Blink in

2013, which Microsoft Edge adopted by 2020. The second browser war saw

the development of many features of the modern web, including widespread

use of AJAXAsynchronous

JavaScript and XML, where XML stands for eXtensible Markup

Language., HTML5 features like

<canvas>, and a huge explosion in third-party

JavaScript libraries and frameworks.

Web Standards

In parallel with these developments was another, equally important, one—the standardization of web APIs. In October 1994, the World Wide Web Consortium (W3C) was founded to provide oversight and standards for web features. Prior to this point, browsers would often introduce new HTML elements or APIs, and competing browsers would have to copy them. With a standards organization, those elements and APIs could subsequently be agreed upon and documented in specifications. (These days, an initial discussion, design, and specification precedes any new feature.) Later on, the HTML specification ended up moving to a different standards body called the WHATWG, but CSS and other features are still standardized at the W3C. JavaScript is standardized at yet another standards body, TC39 (Technical Committee 39) at ECMA. HTTP is standardized by the IETF. The important point is that the standards process set up in the mid-1990s is still with us.

In the first years of the web, it was not so clear that browsers would remain standard and that one browser might not end up “winning” and becoming another proprietary software platform. There are multiple reasons this didn’t happen, among them the egalitarian ethos of the computing community and the presence and strength of the W3C. Another important reason was the networked nature of the web, and therefore the necessity for web developers to make sure their pages worked correctly in most or all of the browsers (otherwise they would lose customers), leading them to avoid proprietary extensions. On the contrary, browsers worked hard to carefully reproduce each other’s undocumented behaviors—even bugs—to make sure they continued supporting the whole web.

There never really was a point where any browser openly attempted to break away from the standard, despite fears that that might happen.Perhaps the closest the web came to fragmenting was with the late-1990s introduction of features for DHTML—early versions of the Document Object Model you’ll learn about in this book. Netscape and Internet Explorer at first had incompatible implementations of these features, and it took years, the development of a common specification, and significant pressure campaigns on the browsers before standardization was achieved. You can read about this story in much more depth from Jay Hoffman. Instead, intense competition for market share was channeled into very fast innovation and an ever-expanding set of APIs and capabilities for the web, which we nowadays refer to as the web platform, not just the “World Wide Web”. This recognizes the fact that the web is no longer a document viewing mechanism, but has evolved into a fully realized computing platform and ecosystem.There have even been operating systems built around the web! Examples include webOS, which powered some Palm smartphones, Firefox OS (that today lives on in KaiOS-based phones), and ChromeOS, which is a desktop operating system. All of these operating systems are based on using the web as the UI layer for all applications, with some JavaScript-exposed APIs on top for system integration.

Given the outcomes—multiple competing browsers and well-developed standards—it is in retrospect not that relevant which browser “won” or “lost” each of the browser “wars”. In each case the web won, because it gained users and grew in capability.

Open Source

Another important and interesting outcome of the second browser war was that all mainstream browsers todayExamples of Chromium-based browsers include Chrome, Edge, Opera (which switched to Chromium from the Presto engine in 2013), Samsung Internet, Yandex Browser, UC Browser, and Brave. In addition, there are many “embedded” browsers, based on one or another of the three engines, for a wide variety of automobiles, phones, TVs, and other electronic devices. are based on three open-source web rendering / JavaScript engines: Chromium, Gecko, and WebKit.The JavaScript engines are actually in different repositories (as are various other subcomponents), and can and do get used outside the browser as JavaScript virtual machines. One important application is the use of V8 to power node.js. However, each of the three rendering engines does have a corresponding JavaScript implementation, so conflating the two is reasonable. Since Chromium and WebKit have a common ancestral codebase, while Gecko is an open-source descendant of Netscape, all three date back to the 1990s—almost to the beginning of the web.

This is not an accident, and in fact tells us something quite interesting about the most cost-effective way to implement a rendering engine based on a commodity set of platform APIs. For example, it’s common for independent developers, not paid by the company nominally controlling the browser, to contribute code and features. There are even companies and individuals that specialize in implementing browser features! It’s also common for features in one browser to copy code from another. And every major browser being open source feeds back into the standards process, reinforcing the web’s decentralized nature.

Summary

In summary, the history went like this:

Basic research was performed into ways to represent and explore information.

Once the necessary technology became mature enough, the web proper was proposed and implemented.

The web became popular quite quickly, and many browsers appeared in order to capitalize on the web’s opportunity.

Standards organizations were introduced in order to negotiate between the browsers and avoid proprietary control.

Competition between browsers grew their power and complexity at a rapid pace.

Browsers appeared on all devices and operating systems, from desktop to mobile to embedded.

Eventually, all web rendering engines became open source, as a recognition of their being a shared effort larger than any single entity.

The web has come a long way! But one thing seems clear: it isn’t done yet.

Exercises

iii-1 What comes next? Based on what you learned about how the web came about and took its current form, what trends do you predict for its future evolution? For example, do you think it’ll compete effectively against other non-web technologies and platforms?

iii-2 What became of the original ideas? The way the web works in practice is significantly different than the memex; one key difference is that there is no built-in way for the user of the web to add links between pages or notate them. Why do you think this is? Can you think of other goals from the original work that remain unrealized?

Downloading Web Pages

A web browser displays information identified by a URL. And the first step is to use that URL to connect to and download information from a server somewhere on the internet.

Connecting to a Server

Browsing the internet starts with a URL,“URL” stands for “uniform resource locator”, meaning that it is a portable (uniform) way to identify web pages (resources) and also that it describes how to access those files (locator). a short string that identifies a particular web page that the browser should visit.

http://example.org/index.html

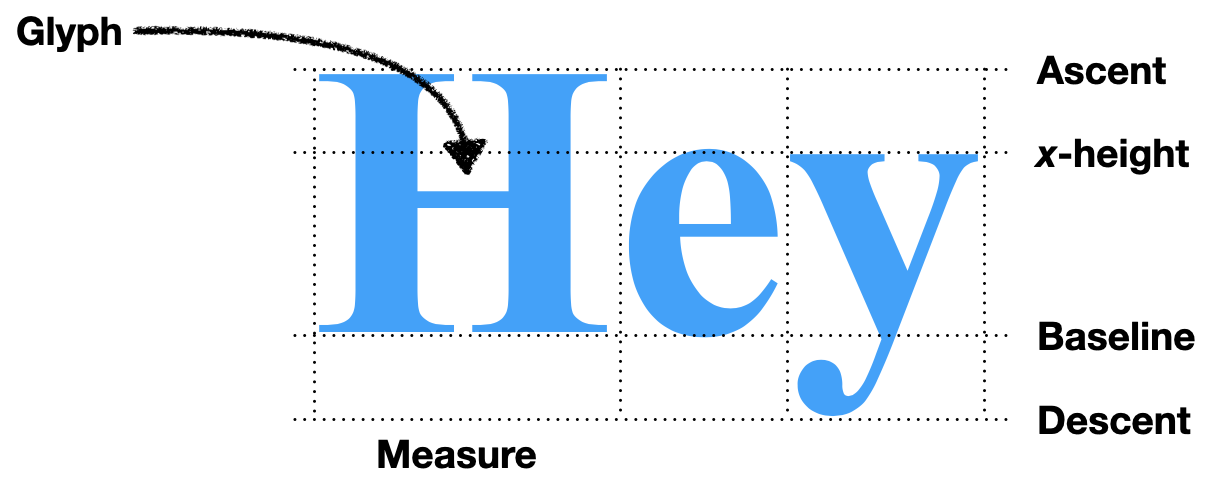

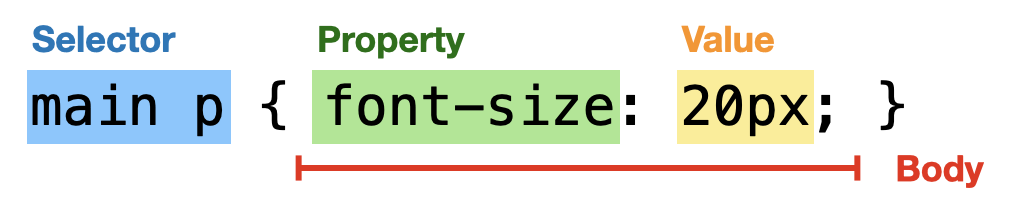

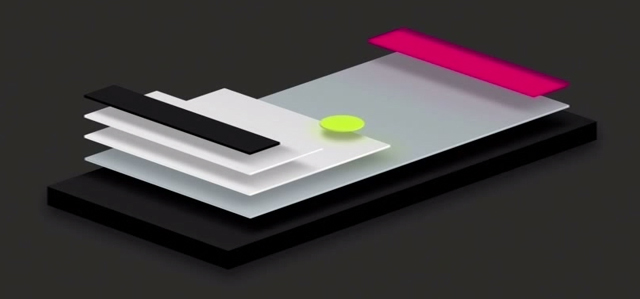

Figure 1: The syntax of URLs.

A URL has three parts (see Figure 1): the scheme explains how to get the information; the host name explains where to get it; and the path explains what information to get. There are also optional parts to the URL, like ports, queries, and fragments, which we’ll see later.

From a URL, the browser can start the process of downloading the web

page. The browser first asks the local operating system (OS) to put it

in touch with the server described by the host name.

The OS then talks to a Domain Name System (DNS) server which

convertsYou can use a

DNS lookup tool like nslookup.io or

the dig command to do this conversion

yourself. a host name like example.org into a

destination IP address like 93.184.216.34.Today there are two versions

of IP (Internet Protocol): IPv4 and IPv6. IPv6 addresses are a lot

longer and are usually written in hexadecimal, but otherwise the

differences don’t matter here. Then the OS decides which

hardware is best for communicating with that destination IP address

(say, wireless or wired) using what is called a routing table,

and then uses device drivers to send signals over a wire or over the

air.I’m skipping steps

here. On wires you first have to wrap communications in ethernet frames,

on wireless you have to do even more. I’m trying to be

brief. Those signals are picked up and transmitted by a

series of routersOr a switch, or an access point; there are a lot of

possibilities, but eventually there is a router. which

each choose the best direction to send your message so that it

eventually gets to the destination.They may also record where the message came from so they

can forward the reply back. When the message reaches the

server, a connection is created. Anyway, the point of this is that the

browser tells the OS, “Hey, put me in touch with

example.org”, and it does.

On many systems, you can set up this kind of connection using the

telnet program, like this:The “80” is the port,

discussed below.

telnet example.org 80(Note: When you see a gray outline, it means that the code in question is an example only, and not actually part of our browser’s code.)

You might need to install telnet; it is often disabled

by default. On Windows, go to Programs

and Features / Turn Windows features on or off in the Control Panel;

you’ll need to reboot. When you run it, it’ll clear the screen instead

of printing something, but other than that works normally. On macOS, you

can use the nc -v command as a replacement for

telnet:

nc -v example.org 80The output is a little different but it works in the same way. On

most Linux systems, you can install telnet or

nc from the package manager, usually from packages called

telnet and netcat.

You’ll get output that looks like this:

Trying 93.184.216.34...

Connected to example.org.

Escape character is '^]'.This means that the OS converted the host name

example.org into the IP address 93.184.216.34

and was able to connect to it.The line about escape characters is just instructions for

using obscure telnet features. You can now

talk to example.org.

The URL syntax is defined in RFC 3987, whose first author is Tim Berners-Lee—no surprise there! The second author is Roy Fielding, a key contributor to the design of HTTP and also well known for describing the Representational State Transfer (REST) architecture of the web in his Ph.D. thesis, which explains how REST allowed the web to grow in a decentralized way. Today, many services provide “RESTful APIs” that also follow these principles, though there does seem to be some confusion about it.

Requesting Information

Once it’s connected, the browser requests information from the server

by giving its path, the path being the part of a URL that comes

after the host name, like /index.html. The structure of the

request is shown in Figure 2. Type this into telnet to try

it.

GET /index.html HTTP/1.0 Host: example.org

Figure 2: An annotated HTTP GET request.

Here, the word GET means that the browser would like to

receive information,It

could say POST if it intended to send information, plus

there are some other, more obscure, options. then comes

the path, and finally there is the word HTTP/1.0 which

tells the host that the browser speaks version 1.0 of HTTP. There

are several versions of HTTP (0.9,

1.0, 1.1, 2.0, and 3.0). The HTTP 1.1 standard adds a variety of

useful features, like keep-alive, but in the interest of simplicity our

browser won’t use them. We’re also not implementing HTTP 2.0; it is much

more complex than the 1.x series, and is intended for large and

complex web applications, which our browser can’t run anyway.

After the first line, each line contains a header, which has

a name (like Host) and a value (like

example.org). Different headers mean different things; the

Host header, for example, tells the server who you think it

is.This is useful when

the same IP address corresponds to multiple host names and hosts

multiple websites (for example, example.com and

example.org). The Host header tells the server

which of multiple websites you want. These websites basically require

the Host header to function properly. Hosting multiple

domains on a single computer is very common. There are

lots of other headers one could send, but let’s stick to just

Host for now.

Finally, after the headers comes a single blank line; that tells the

host that you are done with headers. So type a blank line into

telnet (hit Enter twice after typing the two lines of the

request) and you should get a response from

example.org.

HTTP/1.0 is standardized in RFC 1945, and HTTP/1.1 in

RFC 2616. HTTP was

designed to be simple to understand and implement, making it easy for

any kind of computer to adopt it. It’s no coincidence that you can type

HTTP directly into telnet! Nor is it an accident that HTTP

is a “line-based protocol”, using plain text and newlines, similar to

the Simple Mail Transfer Protocol (SMTP)

for email. Ultimately, the whole pattern derives from early computers

only having line-based text input. In fact, one of the first two

browsers had a line-mode

UI.

The Server’s Response

The server’s response starts with the line in Figure 3.

HTTP/1.0 200 OK

Figure 3: Annotated first line of an HTTP response.

This tells you that the host confirms that it, too, speaks

HTTP/1.0, and that it found your request to be “OK” (which

has a numeric code of 200). You may be familiar with

404 Not Found; that’s another numeric code and response, as

are 403 Forbidden or 500 Server Error. There

are lots of these codes, and they have a pretty neat organization

scheme:The status text

like OK can actually be anything and is just there for

humans, not for machines.

- the 100s are informational messages;

- the 200s mean you were successful;

- the 300s request follow-up action (usually a redirect);

- the 400s mean you sent a bad request;

- the 500s mean the server handled the request badly.

Note the genius of having two sets of error codes (400s and 500s) to tell you who is at fault, the server or the browser.More precisely, who the server thinks is at fault. You can find a full list of the different codes on Wikipedia, and new ones do get added here and there.

After the 200 OK line, the server sends its own headers.

When I did this, I got these headers (but yours will differ):

Age: 545933

Cache-Control: max-age=604800

Content-Type: text/html; charset=UTF-8

Date: Mon, 25 Feb 2019 16:49:28 GMT

Etag: "1541025663+gzip+ident"

Expires: Mon, 04 Mar 2019 16:49:28 GMT

Last-Modified: Fri, 09 Aug 2013 23:54:35 GMT

Server: ECS (sec/96EC)

Vary: Accept-Encoding

X-Cache: HIT

Content-Length: 1270

Connection: closeThere is a lot here, about the information you are

requesting (Content-Type, Content-Length, and

Last-Modified), about the server (Server,

X-Cache), about how long the browser should cache this

information (Cache-Control, Expires,

Etag), and about all sorts of other stuff. Let’s move on

for now.

After the headers there is a blank line followed by a bunch of HTML code.

This is called the body of the server’s response, and your

browser knows that it is HTML because of the Content-Type

header, which says that it is text/html. It’s this HTML

code that contains the content of the web page itself.

The HTTP request/response transaction is summarized in Figure 4. Let’s now switch gears from making manual connections to Python.

Wikipedia has nice lists of HTTP headers and response codes. Some of the HTTP response codes are almost never used, like 402 “Payment Required”. This code was intended to be used for “digital cash or (micro) payment systems”. While e-commerce is alive and well without the response code 402, micropayments have not (yet?) gained much traction, even though many people (including me!) think they are a good idea.

Telnet in Python

So far we’ve communicated with another computer using

telnet. But it turns out that telnet is quite

a simple program, and we can do the same programmatically. It’ll require

extracting the host name and path from the URL, creating a

socket, sending a request, and receiving a response.In Python, there’s a library

called urllib.parse for parsing URLs, but I think

implementing our own will be good for learning. Plus, it makes this book

less Python-specific.

Let’s start with parsing the URL. I’m going to make parsing a URL

return a URL object, and I’ll put the parsing code into the

constructor:

class URL:

def __init__(self, url):

# ...The __init__ method is Python’s peculiar syntax for

class constructors, and the self parameter, which you must

always make the first parameter of any method, is Python’s analog of

this in C++ or Java.

Let’s start with the scheme, which is separated from the rest of the

URL by ://. Our browser only supports http, so

let’s check that, too:

class URL:

def __init__(self, url):

self.scheme, url = url.split("://", 1)

assert self.scheme == "http"Now we must separate the host from the path. The host comes before

the first /, while the path is that slash and everything

after it:

class URL:

def __init__(self, url):

# ...

if "/" not in url:

url = url + "/"

self.host, url = url.split("/", 1)

self.path = "/" + url(When you see a code block with a # ..., like this one,

that means you’re adding code to an existing method or block.) The

split(s, n) method splits a string at the first

n copies of s. Note that there’s some tricky

logic here for handling the slash between the host name and the path.

That (optional) slash is part of the path.

Now that the URL has the host and

path fields, we can download the web page at that URL.

We’ll do that in a new method, request:

class URL:

def request(self):

# ...Note that you always need to write the self parameter

for methods in Python. In the future, I won’t always make such a big

deal out of defining a method—if you see a code block with code in a

method or function that doesn’t exist yet, that means we’re defining

it.

The first step to downloading a web page is connecting to the host. The operating system provides a feature called “sockets” for this. When you want to talk to other computers (either to tell them something, or to wait for them to tell you something), you create a socket, and then that socket can be used to send information back and forth. Sockets come in a few different kinds, because there are multiple ways to talk to other computers:

- A socket has an address family, which tells you how to find

the other computer. Address families have names that begin with

AF. We wantAF_INET, but for exampleAF_BLUETOOTHis another. - A socket has a type, which describes the sort of

conversation that’s going to happen. Types have names that begin with

SOCK. We wantSOCK_STREAM, which means each computer can send arbitrary amounts of data, but there’s alsoSOCK_DGRAM, in which case they send each other packets of some fixed size.DGRAMstands for “datagram”, which I imagine to be like a postcard. - A socket has a protocol, which describes the steps by which

the two computers will establish a connection. Protocols have names that

depend on the address family, but we want

IPPROTO_TCP.Newer versions of HTTP use something called QUIC instead of the Transmission Control Protocol (TCP), but our browser will stick to HTTP 1.0.

By picking all of these options, we can create a socket like so:While this code uses the

Python socket library, your favorite language likely

contains a very similar library; the API is basically standardized. In

Python, the flags we pass are defaults, so you can actually call

socket.socket(); I’m keeping the flags here in case you’re

following along in another language.

import socket

class URL:

def request(self):

s = socket.socket(

family=socket.AF_INET,

type=socket.SOCK_STREAM,

proto=socket.IPPROTO_TCP,

)Once you have a socket, you need to tell it to connect to the other computer. For that, you need the host and a port. The port depends on the protocol you are using; for now it should be 80.

class URL:

def request(self):

# ...

s.connect((self.host, 80))This talks to example.org to set up the connection and

prepare both computers to exchange data.

Naturally this won’t work if you’re offline. It also might not work if you’re behind a proxy, or in a variety of more complex networking environments. The workaround will depend on your setup—it might be as simple as disabling your proxy, or it could be much more complex.

Note that there are two parentheses in the connect call:

connect takes a single argument, and that argument is a

pair of a host and a port. This is because different address families

have different numbers of arguments.

The “sockets” API, which Python more or less implements directly, derives from the original “Berkeley sockets” API design for 4.2 BSD Unix in 1983. Of course, Windows and Linux merely reimplement the API, but macOS and iOS actually do still use large amounts of code descended from BSD Unix.

Request and Response

Now that we have a connection, we make a request to the other server.

To do so, we send it some data using the send method:

class URL:

def request(self):

# ...

request = "GET {} HTTP/1.0\r\n".format(self.path)

request += "Host: {}\r\n".format(self.host)

request += "\r\n"

s.send(request.encode("utf8"))The send method just sends the request to the

server.send

actually returns a number, in this case 47. That tells you

how many bytes of data you sent to the other computer; if, say, your

network connection failed midway through sending the data, you might

want to know how much you sent before the connection

failed. There are a few things in this code that have to

be exactly right. First, it’s very important to use \r\n

instead of \n for newlines. It’s also essential that you

put two \r\n newlines at the end, so that you send

that blank line at the end of the request. If you forget that, the other

computer will keep waiting on you to send that newline, and you’ll keep

waiting on its response.Computers are endlessly literal-minded.

Also note the encode call. When you send data, it’s

important to remember that you are sending raw bits and bytes; they

could form text or an image or video. But a Python string is

specifically for representing text. The encode method

converts text into bytes, and there’s a corresponding

decode method that goes the other way.When you call

encode and decode you need to tell the

computer what character encoding you want it to use. This is a

complicated topic. I’m using utf8 here, which is a common

character encoding and will work on many pages, but in the real world

you would need to be more careful. Python reminds you to

be careful by giving different types to text and to bytes:

>>> type("text")

<class 'str'>

>>> type("text".encode("utf8"))

<class 'bytes'>If you see an error about str versus bytes,

it’s because you forgot to call encode or

decode somewhere.

To read the server’s response, you could use the read

function on sockets, which gives whatever bits of the response have

already arrived. Then you write a loop to collect those bits as they

arrive. However, in Python you can use the makefile helper

function, which hides the loop:If you’re in another language, you might only have

socket.read available. You’ll need to write the loop,

checking the socket status, yourself.

class URL:

def request(self):

# ...

response = s.makefile("r", encoding="utf8", newline="\r\n")Here, makefile returns a file-like object containing

every byte we receive from the server. I am instructing Python to turn

those bytes into a string using the utf8 encoding,

or method of associating bytes to letters.Hard-coding utf8

is not correct, but it’s a shortcut that will work alright on most

English-language websites. In fact, the Content-Type header

usually contains a charset declaration that specifies the

encoding of the body. If it’s absent, browsers still won’t default to

utf8; they’ll guess, based on letter frequencies, and you

will see ugly � strange áççêñ£ß when they guess wrong. I’m

also informing Python of HTTP’s weird line endings.

Let’s now split the response into pieces. The first line is the status line:I could have asserted that 200 is required, since that’s the only code our browser supports, but it’s better to just let the browser render the returned body, because servers will generally output a helpful and user-readable HTML error page even for error codes. This is another way in which the web is easy to implement incrementally.

class URL:

def request(self):

# ...

statusline = response.readline()

version, status, explanation = statusline.split(" ", 2)Note that I do not check that the server’s version of HTTP is the same as mine; this might sound like a good idea, but there are a lot of misconfigured servers out there that respond in HTTP 1.1 even when you talk to them in HTTP 1.0.Luckily the protocols are similar enough to not cause confusion.

After the status line come the headers:

class URL:

def request(self):

# ...

response_headers = {}

while True:

line = response.readline()

if line == "\r\n": break

header, value = line.split(":", 1)

response_headers[header.casefold()] = value.strip()For the headers, I split each line at the first colon and fill in a

map of header names to header values. Headers are case-insensitive, so I

normalize them to lower case.I used casefold

instead of lower, because it works better for more

languages. Also, whitespace is insignificant in HTTP

header values, so I strip off extra whitespace at the beginning and

end.

Headers can describe all sorts of information, but a couple of headers are especially important because they tell us that the data we’re trying to access is being sent in an unusual way. Let’s make sure none of those are present.Exercise 1-9 describes how your browser should handle these headers if they are present.

class URL:

def request(self):

# ...

assert "transfer-encoding" not in response_headers

assert "content-encoding" not in response_headersThe usual way to get the sent data, then, is everything after the headers:

class URL:

def request(self):

# ...

content = response.read()

s.close()It’s the body that we’re going to display, so let’s return that:

class URL:

def request(self):

# ...

return contentNow let’s actually display the text in the response body.

The Content-Encoding

header lets the server compress web pages before sending them. Large,

text-heavy web pages compress well, and as a result the page loads

faster. The browser needs to send an Accept-Encoding

header in its request to list the compression algorithms it

supports. Transfer-Encoding

is similar and also allows the data to be “chunked”, which many servers

seem to use together with compression.

Displaying the HTML

The HTML code in the response body defines the content you see in

your browser window when you go to

http://example.org/index.html. I’ll be talking much, much

more about HTML in future chapters, but for now let me keep it very

simple.

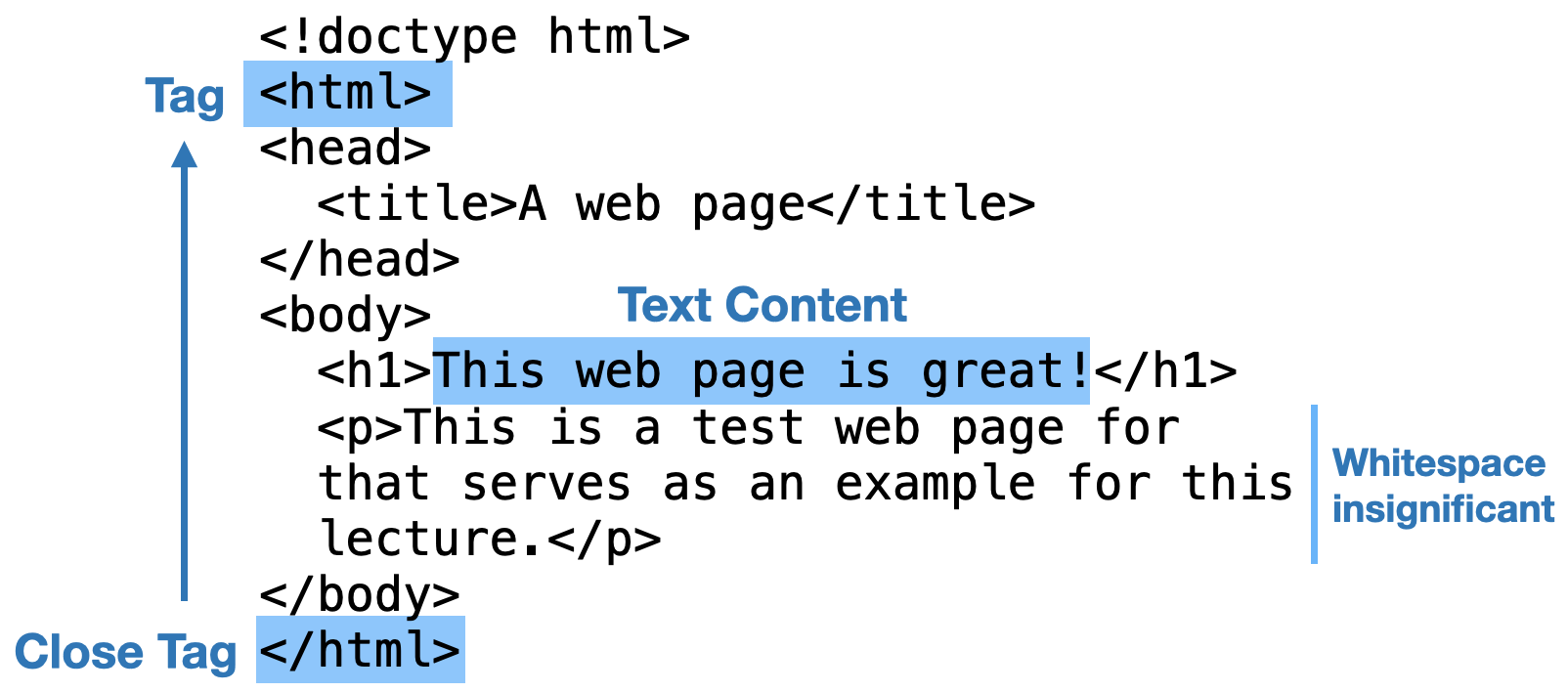

In HTML, there are tags and text. Each tag starts

with a < and ends with a >; generally

speaking, tags tell you what kind of thing some content is, while text

is the actual content.That said, some tags, like img, are content,

not information about it. Most tags come in pairs of a

start and an end tag; for example, the title of the page is enclosed in

a pair of tags: <title> and

</title>. Each tag, inside the angle brackets, has a

tag name (like title here), and then optionally a space

followed by attributes, and its pair has a /

followed by the tag name (and no attributes).

So, to create our very, very simple web browser, let’s take the page

HTML and print all the text, but not the tags, in it.If this example causes Python

to produce a SyntaxError pointing to the end

on the last line, it is likely because you are running Python 2 instead

of Python 3. Make sure you are using Python 3. I’ll do

this in a new function, show:Note that this is a global

function and not in the URL class.

def show(body):

in_tag = False

for c in body:

if c == "<":

in_tag = True

elif c == ">":

in_tag = False

elif not in_tag:

print(c, end="")This code is pretty complex. It goes through the request body

character by character, and it has two states: in_tag, when

it is currently between a pair of angle brackets, and

not in_tag. When the current character is an angle bracket,

it changes between those states; normal characters, not inside a tag,

are printed.The

end argument tells Python not to print a newline after the

character, which it otherwise would.

We can now load a web page just by stringing together

request and show:Like show, this

is a global function.

def load(url):

body = url.request()

show(body)Add the following code to run load from the command

line:

if __name__ == "__main__":

import sys

load(URL(sys.argv[1]))The first line is Python’s version of a main function,

run only when executing this script from the command line. The code

reads the first argument (sys.argv[1]) from the command

line and uses it as a URL. Try running this code on the URL

http://example.org/:

python3 browser.py http://example.org/You should see some short text welcoming you to the official example web page. You can also try using it on this chapter!

HTML, just like URLs and HTTP, is designed to be very easy to parse and display at a basic level. And in the beginning there were very few features in HTML, so it was possible to code up something not so much more fancy than what you see here, yet still display the content in a usable way. Even our super simple and basic HTML parser can already print out the text of the browser.engineering website.

Encrypted Connections

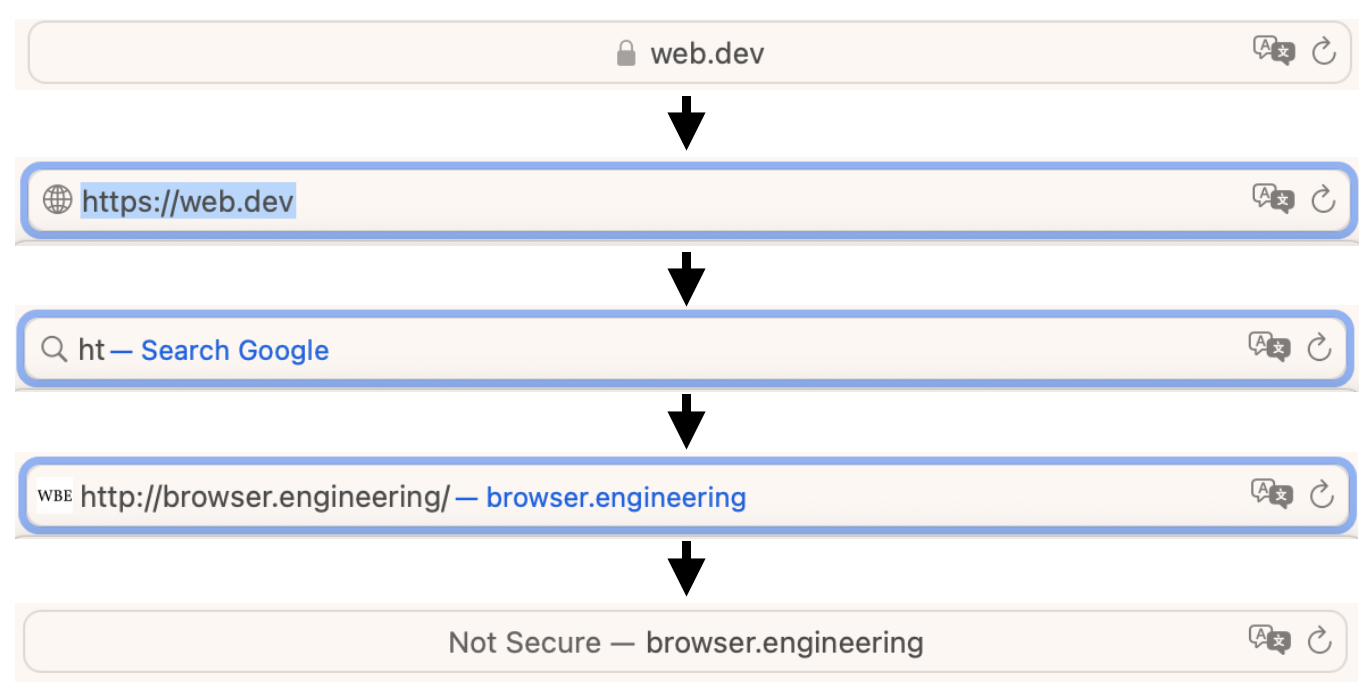

So far, our browser supports the http scheme. That’s a

pretty common scheme. But more and more websites are migrating to the

https scheme, and many websites require it.

The difference between http and https is

that https is more secure—but let’s be a little more

specific. The https scheme, or more formally HTTP over TLS

(Transport Layer Security), is identical to the normal http

scheme, except that all communication between the browser and the host

is encrypted. There are quite a few details to how this works: which

encryption algorithms are used, how a common encryption key is agreed

to, and of course how to make sure that the browser is connecting to the

correct host. The difference in the protocol layers involved is shown in

Figure 5.

Luckily, the Python ssl library implements all of these

details for us, so making an encrypted connection is almost as easy as

making a regular connection. That ease of use comes with accepting some

default settings which could be inappropriate for some situations, but

for teaching purposes they are fine.

Making an encrypted connection with ssl is pretty easy.

Suppose you’ve already created a socket, s, and connected

it to example.org. To encrypt the connection, you use

ssl.create_default_context to create a context

ctx and use that context to wrap the socket

s:

import ssl

ctx = ssl.create_default_context()

s = ctx.wrap_socket(s, server_hostname=host)Note that wrap_socket returns a new socket, which I save

back into the s variable. That’s because you don’t want to

send any data over the original socket; it would be unencrypted and also

confusing. The server_hostname argument is used to check

that you’ve connected to the right server. It should match the

Host header.

On macOS, you’ll need to run

a program called “Install Certificates” before you can use Python’s

ssl package on most websites.

Let’s try to take this code and add it to request.

First, we need to detect which scheme is being used:

import ssl

class URL:

def __init__(self, url):

self.scheme, url = url.split("://", 1)

assert self.scheme in ["http", "https"]

# ...(Note that here you’re supposed to replace the existing scheme parsing code with this new code. It’s usually clear from context, and the code itself, what you need to replace.)

Encrypted HTTP connections usually use port 443 instead of port 80:

class URL:

def __init__(self, url):

# ...

if self.scheme == "http":

self.port = 80

elif self.scheme == "https":

self.port = 443We can use that port when creating the socket:

class URL:

def request(self):

# ...

s.connect((self.host, self.port))

# ...Next, we’ll wrap the socket with the ssl library:

class URL:

def request(self):

# ...

s.connect((self.host, self.port))

if self.scheme == "https":

ctx = ssl.create_default_context()

s = ctx.wrap_socket(s, server_hostname=self.host)

# ...Your browser should now be able to connect to HTTPS sites.

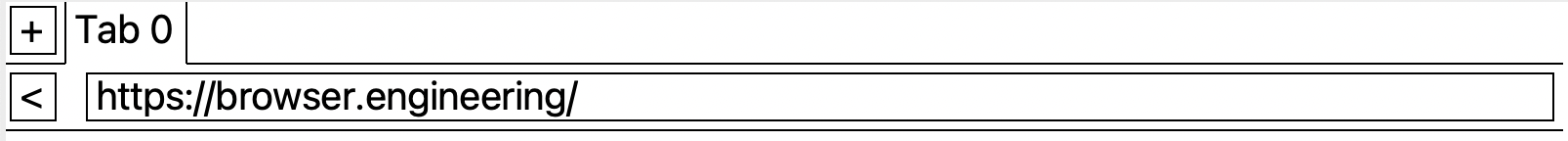

While we’re at it, let’s add support for custom ports, which are specified in a URL by putting a colon after the host name, as in Figure 6.

http://example.org:8080/index.html

Figure 6: Where the port goes in a URL.

If the URL has a port we can parse it out and use it:

class URL:

def __init__(self, url):

# ...

if ":" in self.host:

self.host, port = self.host.split(":", 1)

self.port = int(port)Custom ports are handy for debugging. Python has a built-in web server you can use to serve files on your computer. For example, if you run

python3 -m http.server 8000 -d /some/directorythen going to http://localhost:8000/ should show you all

the files in that directory. This is a good way to test your

browser.

TLS is pretty complicated. You can read the details in RFC 8446, but implementing your own is not recommended. It’s very difficult to write a custom TLS implementation that is not only correct but secure.

At this point you should be able to run your program on any web page. Here is what it should output for a simple example:

This is a simple

web page with some

text in it.

Summary

This chapter went from an empty file to a rudimentary web browser that can:

- parse a URL into a scheme, host, port, and path;

- connect to that host using the

socketandssllibraries; - send an HTTP request to that host, including a

Hostheader; - split the HTTP response into a status line, headers, and a body;

- print the text (and not the tags) in the body.

Yes, this is still more of a command-line tool than a web browser, but it already has some of the core capabilities of a browser.

Outline

The complete set of functions, classes, and methods in our browser should look something like this:

class URL:

def __init__(url)

def request()

def show(body)

def load(url)

Exercises

1-1 HTTP/1.1. Along with Host, send the

Connection header in the request function with

the value close. Your browser can now declare that it is

using HTTP/1.1. Also add a User-Agent header.

Its value can be whatever you want—it identifies your browser to the

host. Make it easy to add further headers in the future.

1-2 File URLs. Add support for the file scheme,

which allows the browser to open local files. For example,

file:///path/goes/here should refer to the file on your

computer at location /path/goes/here. Also make it so that,

if your browser is started without a URL being given, some specific file

on your computer is opened. You can use that file for quick testing.

1-3 data. Yet another scheme is

data, which allows inlining HTML content into the URL

itself. Try navigating to data:text/html,Hello world! in a

real browser to see what happens. Add support for this scheme to your

browser. The data scheme is especially convenient for

making tests without having to put them in separate files.

1-4 Entities. Implement support for the less-than

(<) and greater-than (>)

entities. These should be printed as < and

>, respectively. For example, if the HTML response was

<div>, the show method of your

browser should print <div>. Entities allow web pages

to include these special characters without the browser interpreting

them as tags.

1-5 view-source. Add support for the

view-source scheme; navigating to

view-source:http://example.org/ should show the HTML source

instead of the rendered page. Add support for this scheme. Your browser

should print the entire HTML file as if it was text. You’ll want to have

also implemented Exercise 1-4.

1-6 Keep-alive. Implement Exercise 1-1; however, do not send

the Connection: close header (send

Connection: keep-alive instead). When reading the body from

the socket, only read as many bytes as given in the

Content-Length header and don’t close the socket afterward.

Instead, save the socket, and if another request is made to the same

server reuse the same socket instead of creating a new one. (You’ll also

need to pass the "rb" option to makefile or

the value reported by Content-Length might not match the

length of the string you’re reading.) This will speed up repeated

requests to the same server, which are common.

1-7 Redirects. Error codes in the 300 range request a

redirect. When your browser encounters one, it should make a new request

to the URL given in the Location header. Sometimes the

Location header is a full URL, but sometimes it skips the

host and scheme and just starts with a / (meaning the same

host and scheme as the original request). The new URL might itself be a

redirect, so make sure to handle that case. You don’t, however, want to

get stuck in a redirect loop, so make sure to limit how many redirects

your browser can follow in a row. You can test this with the URL http://browser.engineering/redirect, which redirects

back to this page, and its /redirect2 and /redirect3 cousins which

do more complicated redirect chains.

1-8 Caching. Typically, the same images, styles, and scripts

are used on multiple pages; downloading them repeatedly is a waste. It’s

generally valid to cache any HTTP response, as long as it was requested

with GET and received a 200 response.Some other status codes like

301 and 404 can also be cached.

Implement a cache in your browser and test it by requesting the same

file multiple times. Servers control caches using the

Cache-Control header. Add support for this header,

specifically for the no-store and max-age

values. If the Cache-Control header contains any value

other than these two, it’s best not to cache the response.

1-9 Compression. Add support for HTTP compression, in which

the browser informs

the server that compressed data is acceptable. Your browser must

send the Accept-Encoding header with the value

gzip. If the server supports compression, its response will

have a Content-Encoding header with value

gzip. The body is then compressed. Add support for this

case. To decompress the data, you can use the decompress

method in the gzip module. GZip data is not

utf8-encoded, so pass "rb" to

makefile to work with raw bytes instead. Most web servers

send compressed data in a Transfer-Encoding called chunked.There are also a couple of

Transfer-Encodings that compress the data. They aren’t

commonly used. You’ll need to add support for that,

too.

Drawing to the Screen

A web browser doesn’t just download a web page; it also has to show

that page to the user. In the twenty-first century, that means a

graphical application.There are some obscure text-based browsers: I used

w3m as my main browser for most of 2011. I don’t

anymore. So in this chapter we’ll equip our browser with a

graphical user interface.

Creating Windows

Desktop and laptop computers run operating systems that provide desktop environments: windows, buttons, and a mouse. So responsibility ends up split: programs control their windows, but the desktop environment controls the screen. Therefore:

- The program asks for a new window and the desktop environment actually displays it.

- The program draws to its window and the desktop environment puts that on the screen.

- The desktop environment tells the program about clicks and key presses, and the program responds and redraws its window.

Doing all of this by hand is a bit of a drag, so programs usually use

a graphical toolkit to simplify these steps. Python comes with

a graphical toolkit called Tk in the Python package

tkinter.The

library is called Tk, and it was originally written for a different

language called Tcl. Python contains an interface to it, hence the

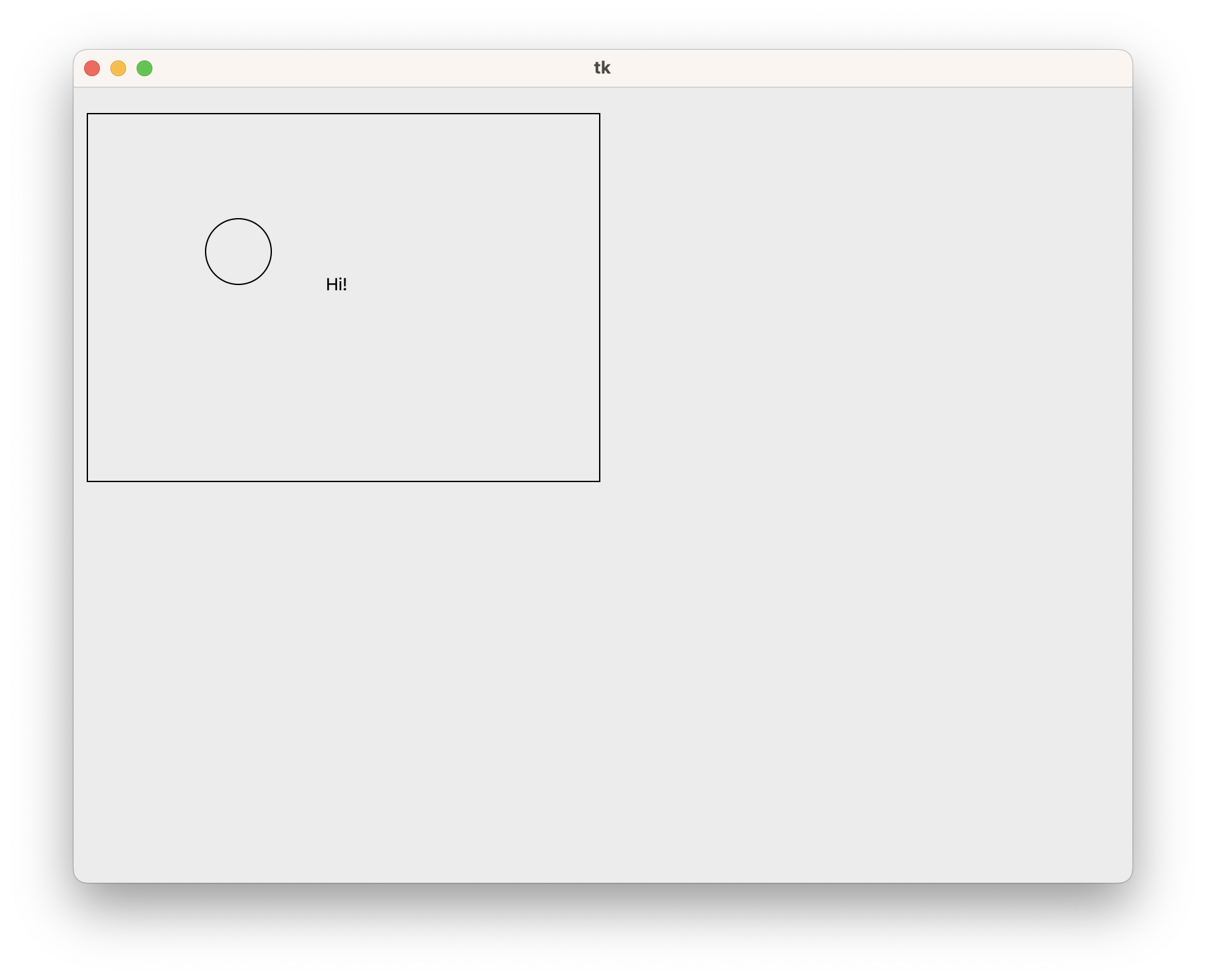

name. Using it is quite simple:

import tkinter

window = tkinter.Tk()

tkinter.mainloop()Here, tkinter.Tk() asks the desktop environment to

create a window and returns an object that you can use to draw to the

window. The tkinter.mainloop() call enters a loop that

looks like this:This

pseudocode may look like an infinite loop that locks up the computer,

but it’s not. Either the operating system will multitask among threads

and processes, or the pendingEvents call will sleep until

events are available, or both; in any case, other code will run and

create events for the loop to respond to.

while True:

for evt in pendingEvents():

handleEvent(evt)

drawScreen()

Here, pendingEvents first asks the desktop environment

for recent mouse clicks or key presses, then handleEvent

calls your application to update state, and then drawScreen

redraws the window. This event loop pattern (see Figure 1) is

common in many applications, from web browsers to video games, because

in complex graphical applications it ensures that all events are

eventually handled and the screen is eventually updated.

Although you’re probably writing your browser on a desktop computer, many people access the web through mobile devices such as phones or tablets. On mobile devices there’s still a screen, a rendering loop, and most other things discussed in this book.For example, most real browsers have both desktop and mobile editions, and the rendering engine code is almost exactly the same for both.

But there are several differences worth noting. Applications are

usually full-screen, with only one application drawing to the screen at